Still haven’t found what you’re looking for?

7 min read

AI-powered search platforms have arrived. Google’s Generative AI in Search, Perplexity, Bing Copilot, You.com, Arc Search, and ChatGPT are competing to harness the power of LLMs to make searches smarter and more precise.

So, what do LLMs mean for the future of search?

How it works

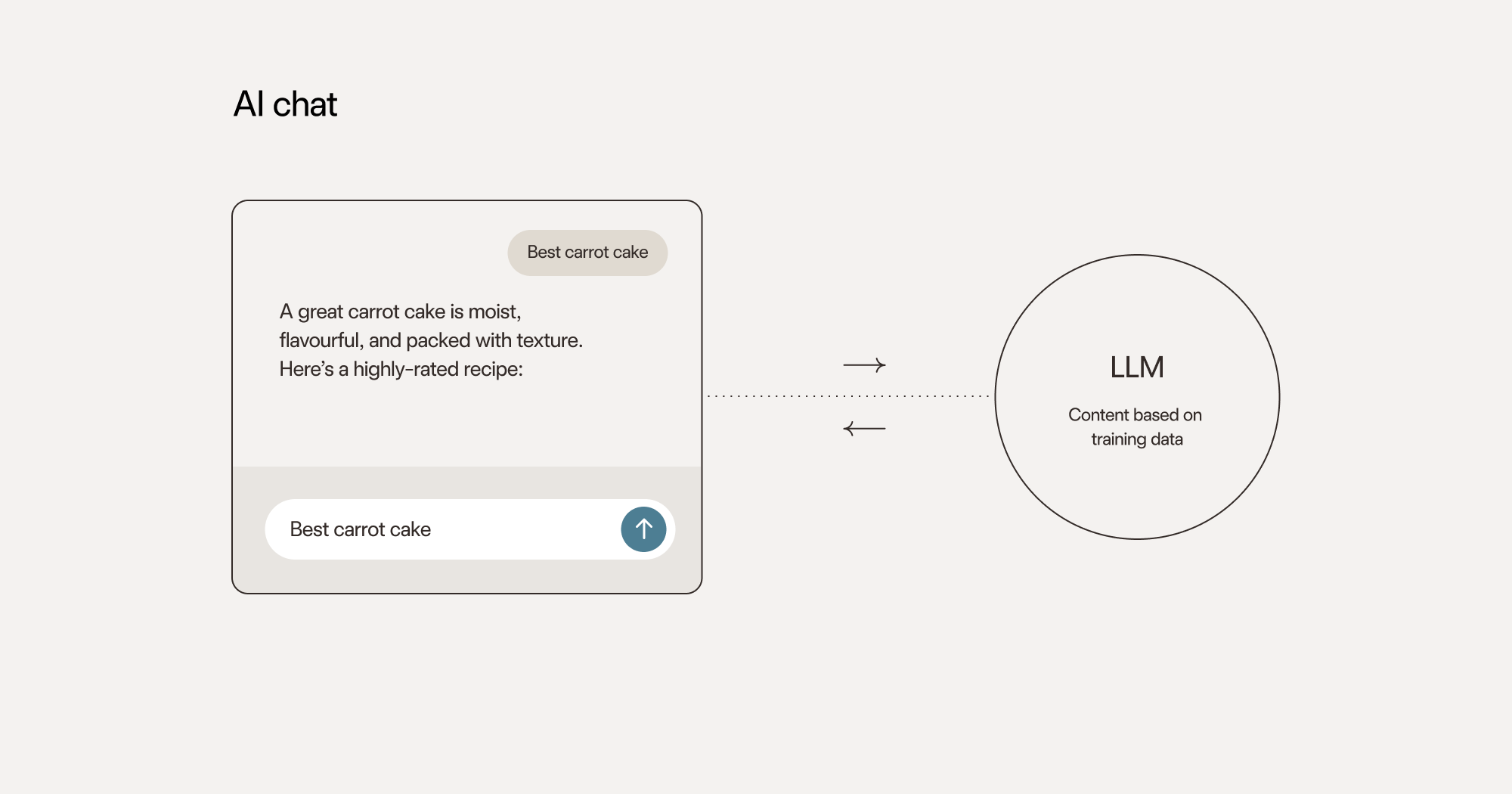

A Large Language Model (LLM) can only answer from its training data, which has a cut-off point. It’s also prone to inaccuracies because it doesn’t understand the true meaning of words, just how they usually connect to each other.

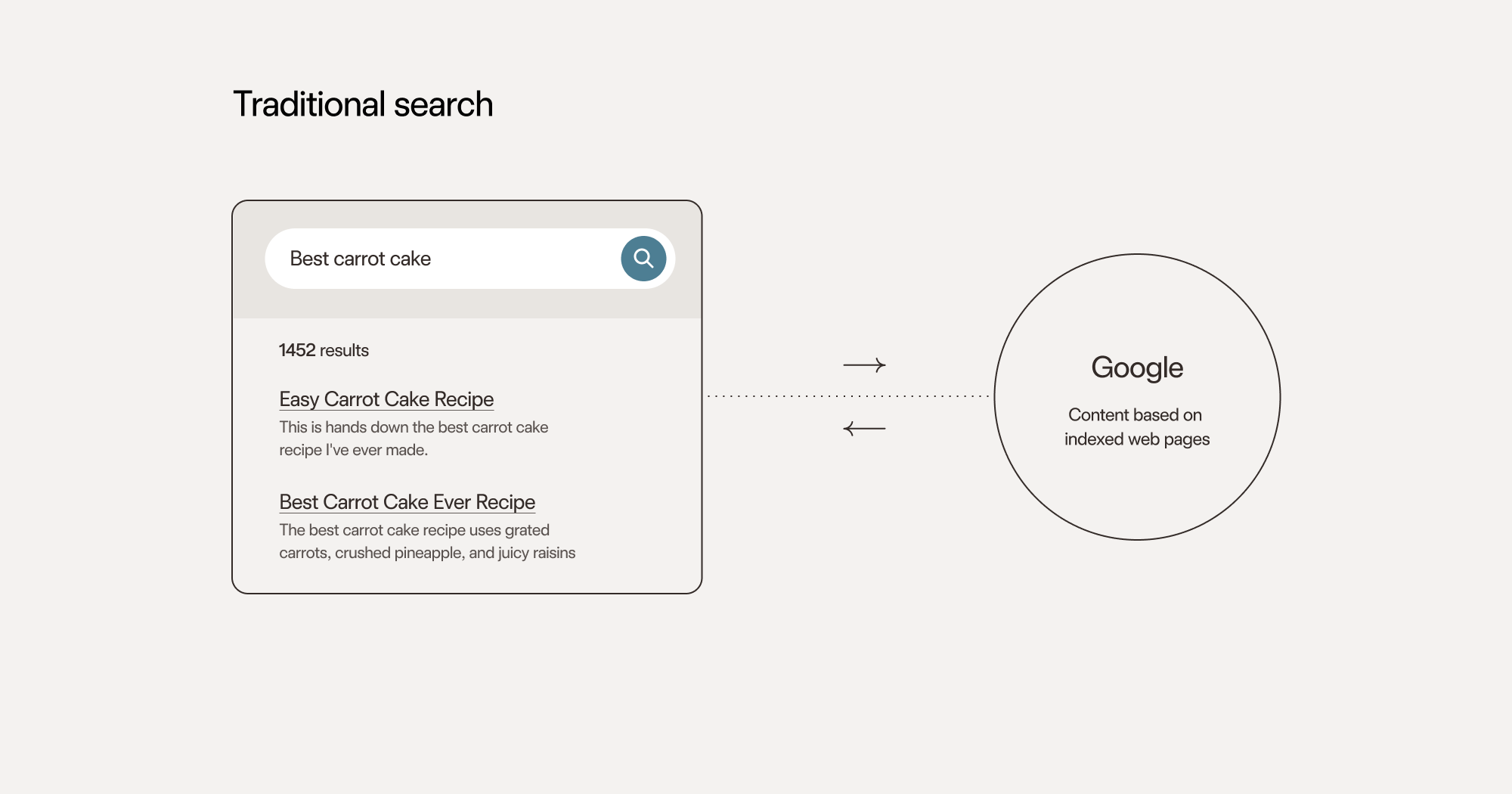

A traditional web search like Google can return many web pages, and it’s up to us to rifle through these results. It’s also our responsibility to make sure our search term is the best it can be to return fruitful results.

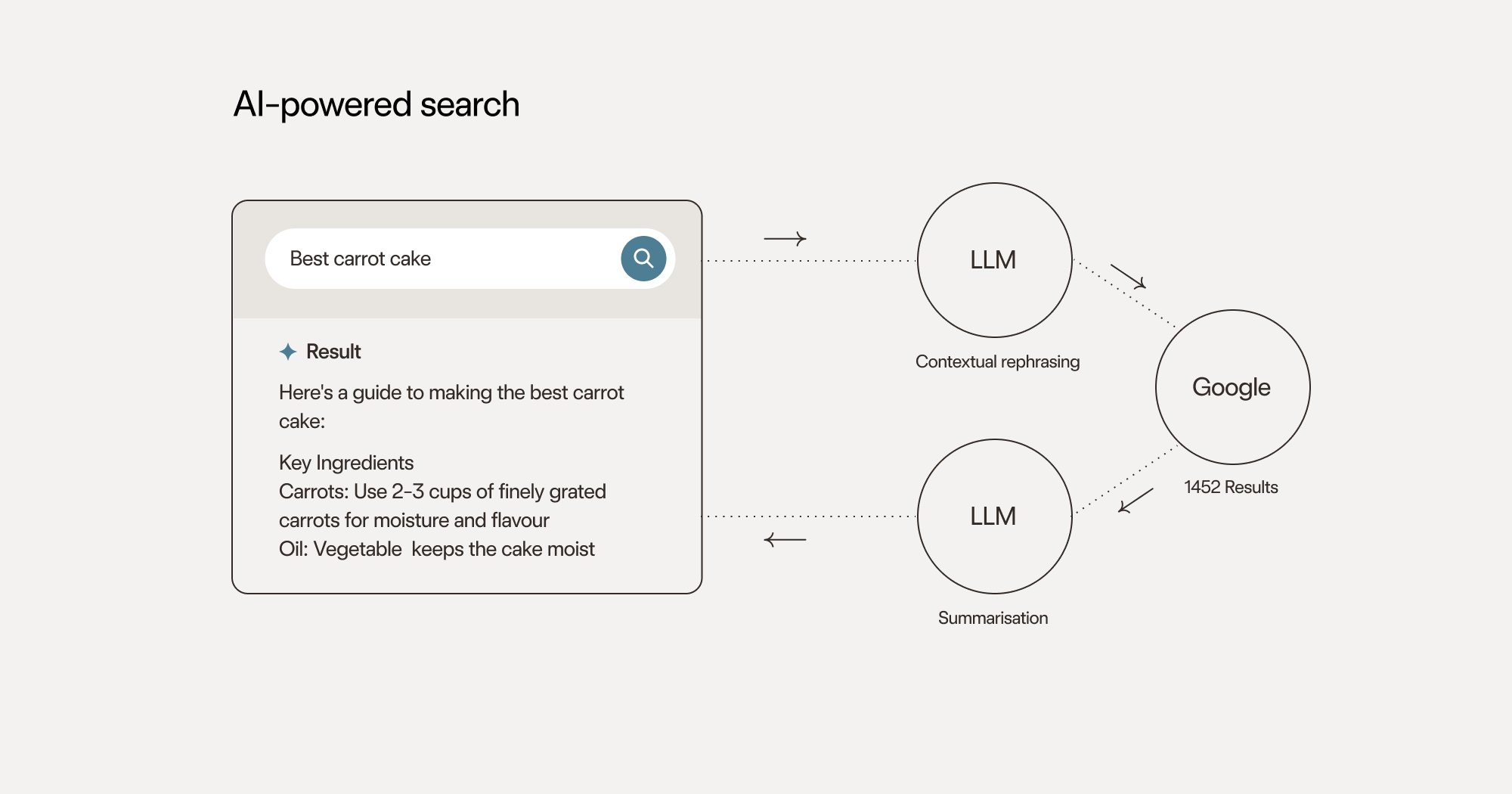

AI-powered search uses the LLM to rephrase your search term. It then runs real-time (traditional) web searches and augments the results with an LLM-powered response.

This concept is called retrieval-augmented generation (RAG) and addresses some of LLMs’ current limitations, such as their tendency to produce outdated responses or inaccurate ones, known as ‘hallucinations.’

These new search platforms can quickly find the relevant parts of 20 web pages in parallel instead of you reading each one. This can save hours of browsing time and produce more reliable results than LLMs’ internal training data.
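As a rough illustration of the flow described above, here is a minimal Python sketch. Everything in it is an assumption made for the example: the in-memory `PAGES` dictionary stands in for live web results, `rephrase_query` stands in for the LLM rewriting step, and the final prompt is printed rather than sent to a model. It is not how any particular search product is built.

```python
import re
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-in for live web results: URL -> page text.
PAGES = {
    "https://example.com/a": "Sourdough needs a starter. Feed the starter daily. Sign up to our newsletter!",
    "https://example.com/b": "Our story began in 1999. Bake sourdough at 230C for 40 minutes.",
    "https://example.com/c": "Cookie settings. Accept all cookies to continue.",
}

STOPWORDS = {"how", "do", "i", "a", "the", "to", "for", "what", "is", "at"}


def tokens(text):
    """Lower-case a string and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def rephrase_query(question):
    """Stand-in for the LLM rewriting step: keep only the content words."""
    return tokens(question) - STOPWORDS


def relevant_sentences(url, terms):
    """Return (url, sentence) pairs from one page that mention a search term."""
    sentences = re.split(r"(?<=[.!?])\s+", PAGES[url])
    return [(url, s) for s in sentences if tokens(s) & terms]


def retrieve(terms):
    """Scan every page in parallel and collect the matching sentences."""
    with ThreadPoolExecutor() as pool:
        per_page = pool.map(lambda url: relevant_sentences(url, terms), PAGES)
        return [hit for hits in per_page for hit in hits]


def build_prompt(question, hits):
    """Assemble the augmented prompt that would be handed to an LLM."""
    sources = "\n".join(f"[{i}] {s} ({url})" for i, (url, s) in enumerate(hits, 1))
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {question}"


question = "How do I bake sourdough?"
hits = retrieve(rephrase_query(question))
prompt = build_prompt(question, hits)
print(prompt)
```

The filler sentences (the newsletter prompt, the cookie banner, the origin story) never reach the prompt, which is the point: the model only sees the passages that matter, along with where they came from.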

Abundance

Do we need this? Did search engines fail? Before I answer these questions, it’s worth taking a moment to understand how we got here.

In the early 2000s, there was a rise in self-publishing and individual presence fuelled by sites like MySpace, Facebook, Reddit, and YouTube. No longer was the Internet the domain of skilled webmasters, but anyone and everyone with something to share.

Twitter, Instagram, Medium, TikTok and Stack Overflow followed, propelling the explosion of content and the growth of the web as we know it today.

Today there are more than one billion websites and around 50 billion web pages. Here are a few dizzying facts:

- 2,500 years of video gets uploaded every month on YouTube

- There’s more than 665 years’ worth of music on Spotify

- Ten million new blog posts are published every day

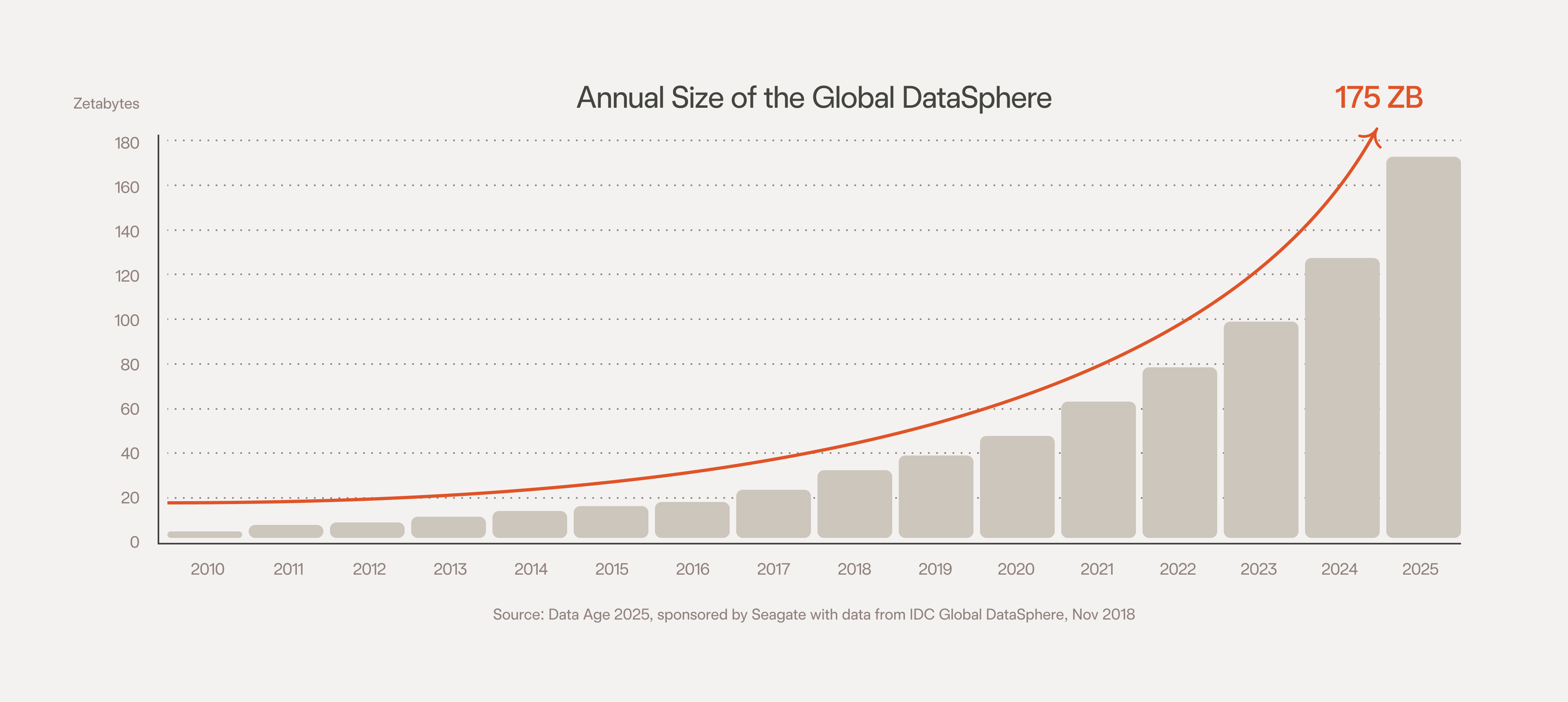

The global datasphere is now over 175 zettabytes (roughly 175 billion terabytes). If this were a single video, it would take twice the universe’s age to watch.
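For a sense of scale, the arithmetic can be checked in a few lines of Python. The terabyte figure depends on whether decimal or binary prefixes are used, and the video comparison depends on the bitrate. The universe age of 13.8 billion years is an assumption added here, and the bitrate is simply what the comparison implies rather than a figure from a source.

```python
# Decimal (SI) and binary (IEC) unit sizes, in bytes.
ZETTABYTE = 10**21
TERABYTE = 10**12
ZEBIBYTE = 2**70
TEBIBYTE = 2**40

datasphere_zb = 175

# The same quantity expressed in terabytes under each convention.
decimal_terabytes = datasphere_zb * ZETTABYTE // TERABYTE
binary_tebibytes = datasphere_zb * ZEBIBYTE // TEBIBYTE

# Assumption: the universe is about 13.8 billion years old.
UNIVERSE_AGE_YEARS = 13.8e9
SECONDS_PER_YEAR = 365.25 * 24 * 3600

# Bitrate a single video would need so that 175 ZB lasts twice that long.
watch_seconds = 2 * UNIVERSE_AGE_YEARS * SECONDS_PER_YEAR
implied_mbps = datasphere_zb * ZETTABYTE * 8 / watch_seconds / 1e6

print(f"{decimal_terabytes:,} TB (decimal)")
print(f"{binary_tebibytes:,} TiB (binary)")
print(f"Implied video bitrate: {implied_mbps:.2f} Mbit/s")
```

Under these assumptions the comparison works out to a fairly low-bitrate video stream, so it is plausible as an order-of-magnitude illustration.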

But the Internet’s size isn’t a problem. In fact, it is one of its greatest strengths. Today, the internet is a huge wealth of human knowledge that keeps growing with little detriment to speed or access.

Unfortunately for us humans, our brains can’t keep up. We each have a finite amount of time and a limited rate of information absorption. We can only read, listen, or watch at a limited speed. Size is not the internet’s problem; it’s ours.

Over the years, we’ve had to develop many abundance-coping methods: Reviews and recommendations help us choose a movie, summaries help us decide whether to commit to a book, and a five-star review helps us choose a hotel or restaurant. We’ve honed our ways of being informed without investing large amounts of time.

Business model pollution

Search engines have done a great job of hoovering and indexing everything on the internet. But again, even their indexes are still too long for us mere finite-timers.

This is where the Internet starts to get a little messed up. Search engines became the primary source of finding content, so if you wanted your content to be found, you needed to play the SEO game and try to land your website on the first results page. This involved dark tricks within the code and the content. It also involved writing longer-form content and even more content to link to your original content to boost its position.

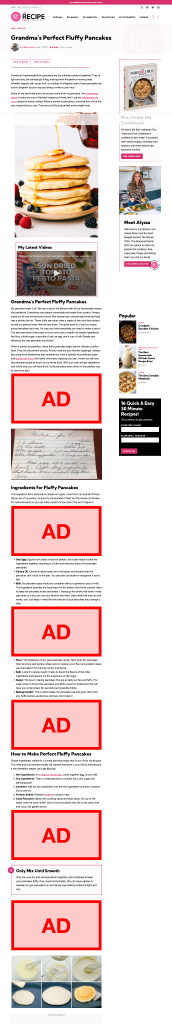

This problem only got worse when content creators could monetise their content through the introduction of ad networks (we’re looking at you, Google Ads). The longer a content creator can keep a visitor on their page, the more ads they can serve. The more ads they can squeeze in, the more money they make.

Eventually, content became obscured, and users had to scroll, expand, or paginate to find what they needed. A classic example of this is the humble recipe website.

Other distractions, such as cookie consents, newsletter sign-ups, and video ads, dilute the information pot and obscure our content.

We’ve buried our knowledge, and even though it’s part of a fantastic globally linked system, there has been no easy way to pinpoint what we need. The business model motivates content creators to make their content easy to find but increasingly difficult to read.

The birth of LLMs

Ironically, this immense growth of content on the Internet, fuelled by ad networks, is exactly what has enabled the training of the large language models that might kill off those ad networks! Ten years ago, this wouldn’t have been possible to the extent of current models. All of our knowledge and logic are encoded in the connections between words.

LLMs don’t think like human brains; they predict each word based on its relevance to previous words and the prompt’s structure.
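To make "predicting the next word" concrete, here is a deliberately tiny Python sketch that counts which word most often follows another in a toy corpus. This is a drastic simplification chosen for illustration: a real LLM uses a neural network over a long context rather than simple pair counts, and the corpus and function names here are invented for the example.

```python
from collections import Counter, defaultdict

# Toy training text (an assumption for this example).
corpus = "the cat sat on the mat . the cat ate the fish . the cat sat on the sofa ."

# For every word, count the words that follow it.
following = defaultdict(Counter)
words = corpus.split()
for prev, nxt in zip(words, words[1:]):
    following[prev][nxt] += 1


def predict_next(word):
    """Return the word seen most often after `word` in the corpus."""
    return following[word].most_common(1)[0][0]


def generate(start, length):
    """Extend `start` by repeatedly appending the most likely next word."""
    out = [start]
    for _ in range(length):
        out.append(predict_next(out[-1]))
    return " ".join(out)


print(generate("the", 4))
```

The model has no idea what a cat is. It only knows that "cat" tends to follow "the" in the text it has seen, which is why fluent output can still be wrong.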

Now, we can use LLMs to make sense of this garbled mess we’ve created online by finding key information (not just single pages) across multiple results and summarising them in a suitable way for the user—all in a matter of seconds.

How LLMs help us find content

- Infer. They rephrase your search term into something more relevant by understanding your context.

- Filter. They cut through filler content and find relevant information.

- Contextualise. They can find meaningful connections across multiple parallel searches.

- Personalise. They can summarise content in a way that suits the user’s context.

- Scrutinise. They let you ask follow-up questions about the results.

- Correct. They reduce hallucinations by grounding answers in retrieved content instead of relying only on the LLM’s training data.

Platform risk

While the benefits are clear, some of these new search products tread a dangerous path. Under the hood, they rely on search engines like Google and Bing to provide search results while relying on LLMs from the likes of OpenAI. The new LLM-based web search start-ups are acting as the glue that brings these features to life, but they run a platform risk: they are vulnerable to Sherlocking by their providers (the introduction of a new feature that renders a third-party tool obsolete). Google’s move into this area signals an uneasy time for third-party AI-based search platforms.

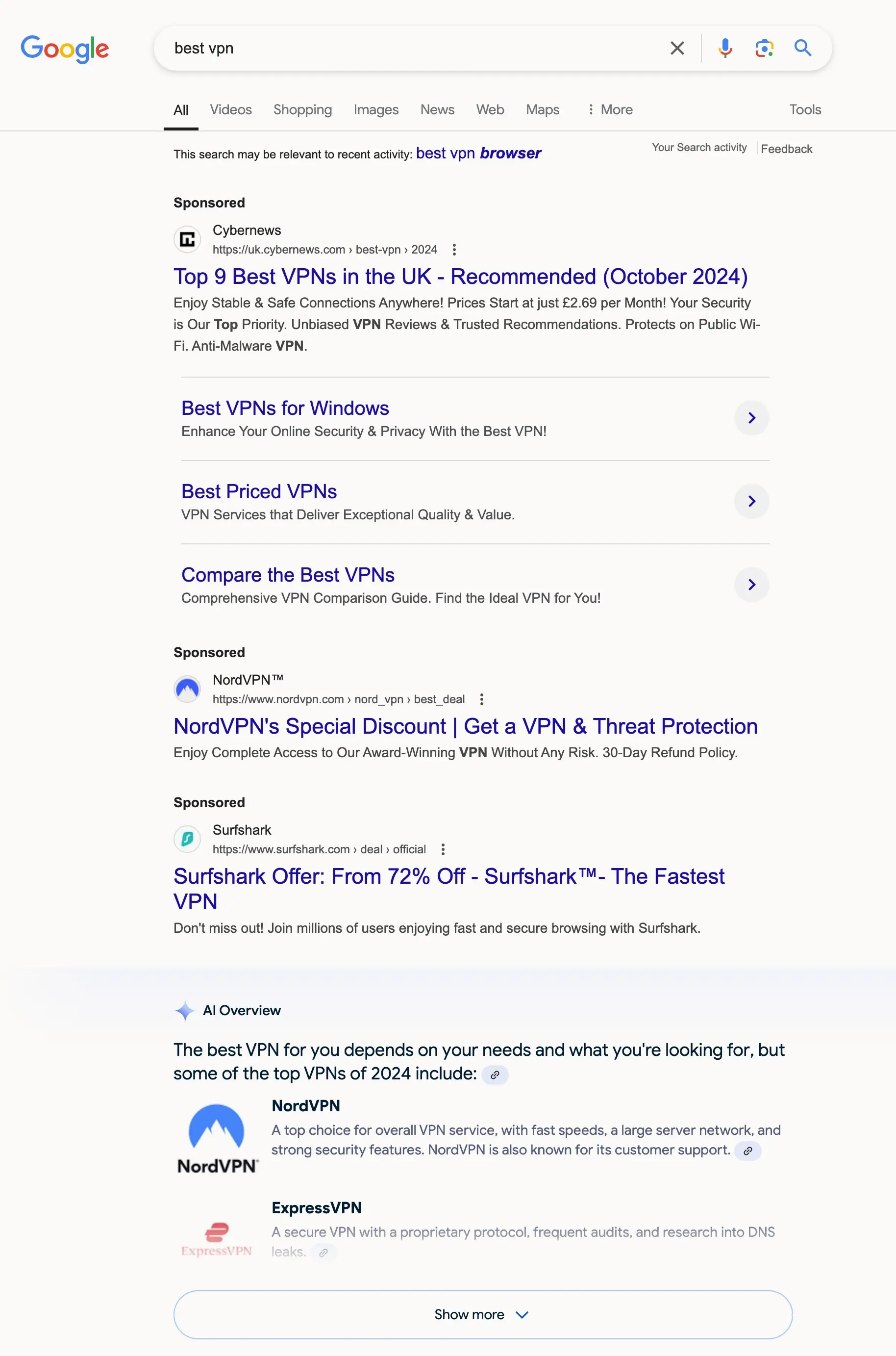

Google ought to be a long way out in front. It has an incredibly powerful model in Gemini, and the world’s information is indexed in its search engine. It trialled AI-based search in Search Labs for a while and has cautiously rolled it out to its users. So what could possibly be holding Google back? There is one giant hurdle for Google — ads.

The Google Ads conundrum

Google’s ad platform generated over $237bn in 2023. That’s nearly 80% of its revenue.

One of the huge benefits of AI-based search is getting to the point — finding the good stuff among a sea of SEO fluff and ads. If these services grow in popularity, fewer people will land on websites and see the ads that site owners, content creators and Google rely on for income.

Serving ads alongside AI-based search results also poses some thorny problems. Who gets that revenue? While LLM Web Search may serve results based on content from your webpage, searchers may not see ads from your original page, and other ads may be displayed, profiting from your content.

There is also the risk of ads being undermined by the returned content. For example, if you search for “the best x”, the ads may recommend one service while the LLM’s response recommends against it.

"In this new generative experience, Search ads will continue to appear in dedicated ad slots throughout the page. And we’ll continue to uphold our commitment to ads transparency and making sure ads are distinguishable from organic search results." — Google

While new AI search platforms have the luxury of rethinking and reinventing the whole paradigm, Google will most likely be shackled to its current platform, opting to shoehorn AI alongside traditional results. Anything that disrupts its ad income will be approached with extreme caution.

The business model that built the Google empire is also a thorn in its side.

Future implications

So, how might AI affect the future search, ads, and SEO landscape? Here are a few provocations we’ve been discussing recently:

- Content creators lose income. Their content is delivered through LLM summaries, and credit may be lost.

- New SEO games will be played. Creators may discover new ways of bubbling their content to the top of AI search summaries, which may involve hiding instructions in page content.

- Data poisoning. Websites could embed malicious content to mislead LLM training data and responses.

- Paid ads evolve. Ad networks could partner with AI search and pay to steer LLM searches toward their customers.

- Profits are shared. Content creators could earn a share of AI search profits, while subscription income gets shared between those who are summarised the most.

- Fractured trust. Unreliable LLM results may force users to seek independent, quality journalism, reviews, and perspectives.

- More paywalls. News outlets, journalists, and content creators could opt out of AI search by hiding their content behind paywalls.

- New laws. Regional laws enforcing attribution and reward may force AI search to limit its operations in some countries.

These thoughts and implications highlight the need to be mindful about future applications of AI — something central to how we work at Normally, and necessary to ensure more intentional and relevant web experiences.

Traditional advertising networks must prepare for a potential challenge as they realise their content-hiding tactics have become detrimental to users seeking direct value. Now, the user stands to gain the most from AI search advancements.

About the Author

Nic Mulvaney is Director of Applied Technologies at Normally.