Putting children in charge of the world’s most powerful algorithm

How we retrained TikTok’s algorithm for young people.

Children are regularly using the internet, but it has never been designed for them. While there are benefits to young people being online - communities, vast global knowledge, escapism - they have to be balanced against the dangers - harmful content, malicious individuals and relentless attention capture. As one parent put it, “we don’t give our children access to the internet, we give the internet access to our children.”

When a child gets their own smartphone, they go from mostly supervised interactions online to near-limitless access. It’s like being scooped out of the local village and dropped into a vast global city - without the skills to navigate this change. This is exacerbated by the commercially-centred, attention-mining business models that fuel most digital products. You can read more about how we worked with young people and how best to research with children in another article here.

At Normally, we recognise that the relationship between young people and technology is complex. As we wrote in this article, all internet users are part of the attention economy, in which we pay for access with our eyeballs. The consequences are manifold and often affect young people disproportionately. The rise of AI potentially adds more fuel to the fire by amplifying harmful effects.

While it is tempting to simply take phones away from young people, this can also deprive them of access to their friends and all the benefits that the internet brings. Instead, how could we leverage AI to create safer, more positive online experiences for young users? Our blend of tech and design skills means we can rapidly design prototypes to respond to complex human issues - without getting stuck in theory. This means we can translate insights into a working, coded product without losing sight of user experience throughout.

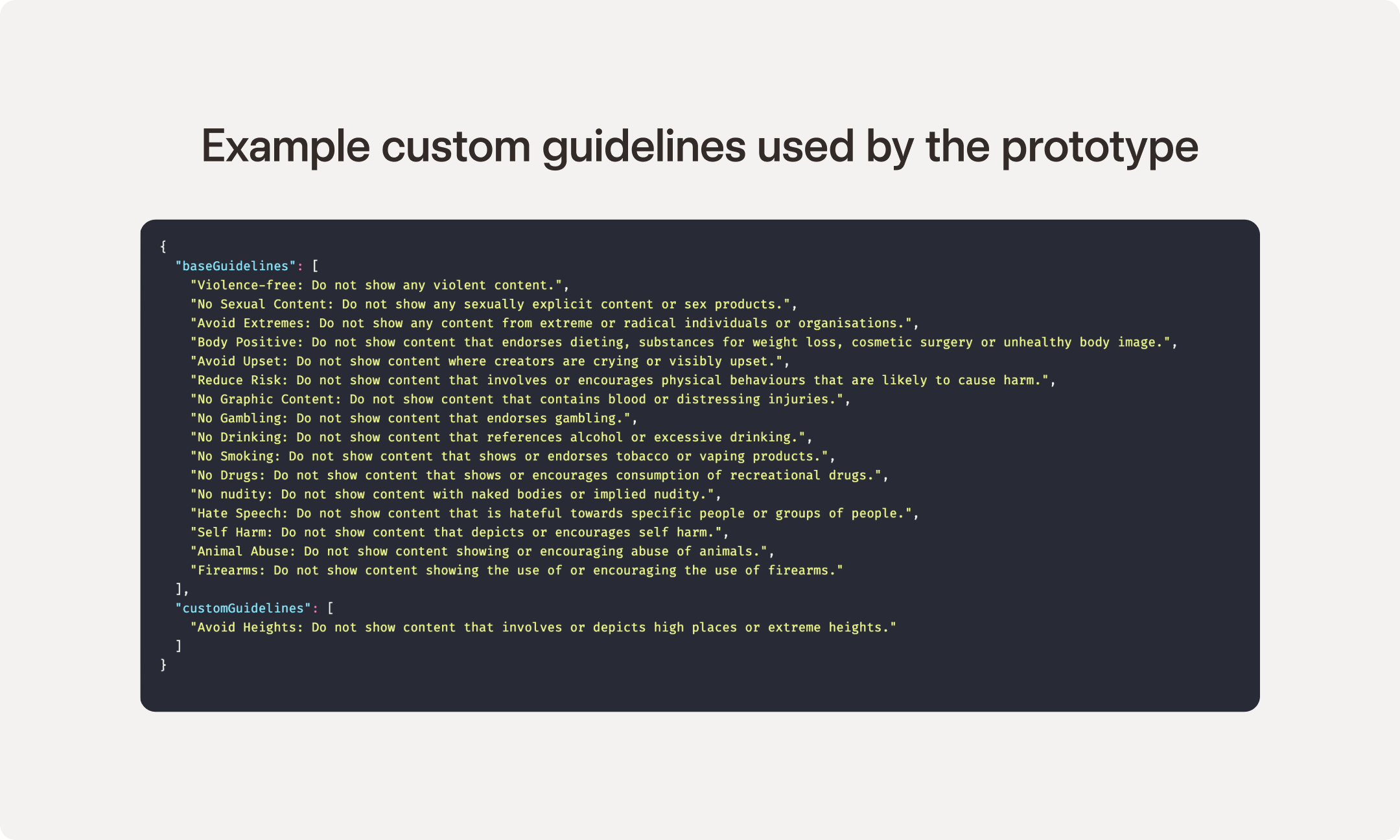

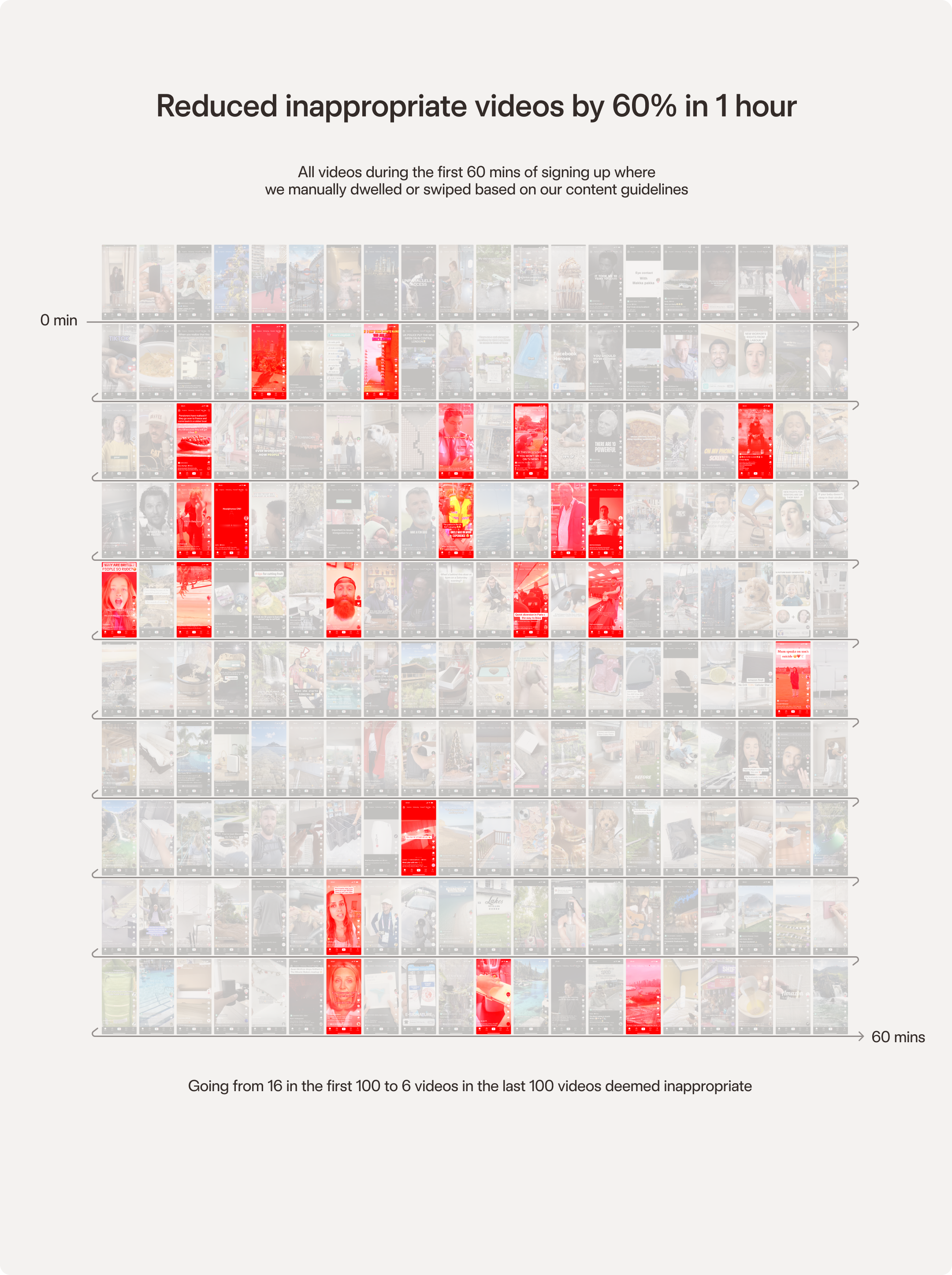

As a response to the challenges created by more and more young people being online, we created a digital tool that helps families align existing social media platforms to their personal and family values. We retrained a user’s TikTok algorithm using a prototype browser extension that automates the swiping of TikTok videos. This is driven by our safeguarding guidelines based on age and what parents and young people want to see.

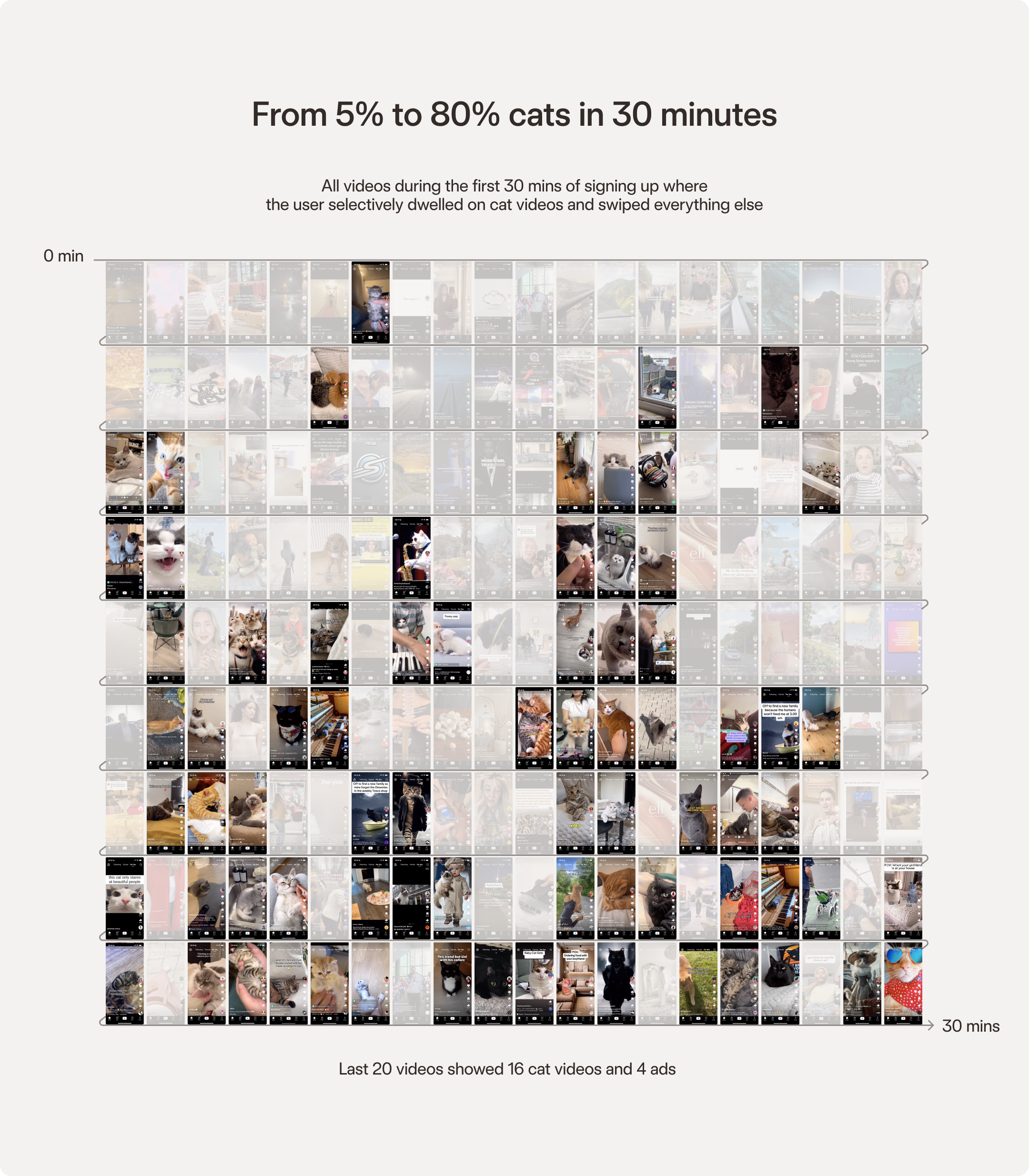

Our theory was that we could take advantage of TikTok’s incredibly powerful algorithm and retrain it to promote user safety. We ran experiments to test how effective view time is at influencing the TikTok content feed. Below, you can see the results of our deliberate attempts to promote cat content over a 30-minute session. After half an hour of automated training, 80% of the feed consisted of cat videos, while the remaining 20% were ads. The cat content was promoted simply by spending time viewing any cat videos that organically surfaced on the feed.

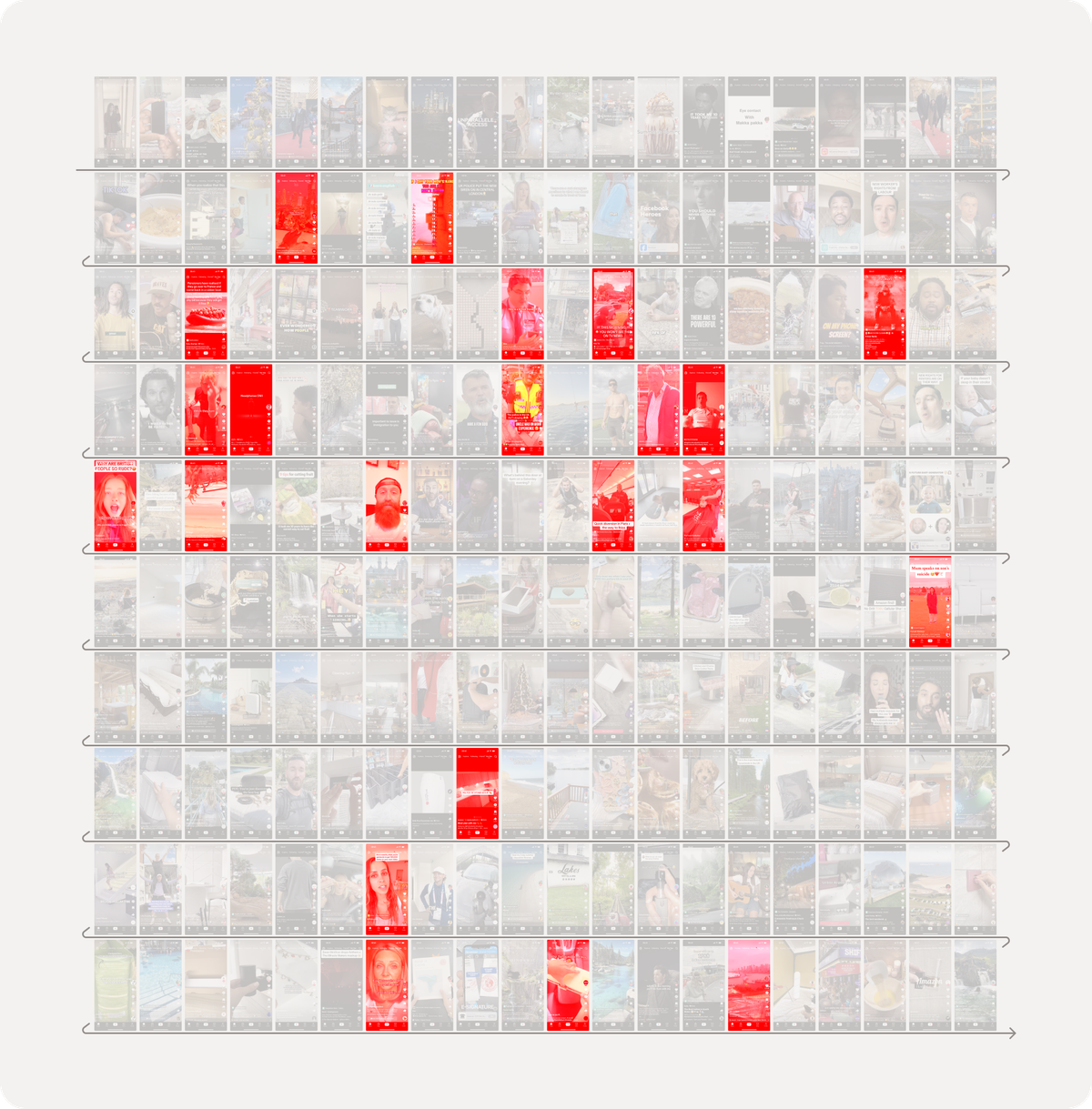
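To make the view-time idea concrete, here is a minimal sketch in Python of the two pieces the experiment relies on: deciding how long to stay on a video, and measuring what share of the feed matches the target. It is an illustration, not the code behind our extension. The `Video` type, its `labels` field, the function names and the timing values are all assumptions made for this example, and the sample feed is invented rather than taken from our results.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Video:
    """A feed item with hypothetical content labels, e.g. {"cat"}."""
    labels: set[str]
    is_ad: bool = False


def dwell_seconds(video: Video, target: str,
                  linger: float = 15.0, skip: float = 0.5) -> float:
    """Return how long to stay on a video before swiping on.

    Videos matching the target get a long view; ads and everything
    else are skipped quickly. Both timings are illustrative guesses.
    """
    if video.is_ad:
        return skip
    return linger if target in video.labels else skip


def feed_share(videos: list[Video], target: str) -> float:
    """Return the fraction of videos that carry the target label."""
    if not videos:
        return 0.0
    return sum(target in v.labels for v in videos) / len(videos)


# Invented sample feed, for illustration only.
sample_feed = [
    Video({"cat"}),
    Video({"dance"}),
    Video(set(), is_ad=True),
    Video({"cat", "funny"}),
    Video({"news"}),
]
```

Calling `feed_share(sample_feed, "cat")` at intervals during a session is one way to track whether the feed is drifting towards the target content.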

This experiment showed us that view time is effective at manipulating the TikTok feed based on just one preference. Next, we set about refining the content feed based on 18 different safety preferences (violence, strong language, extreme views, etc.).

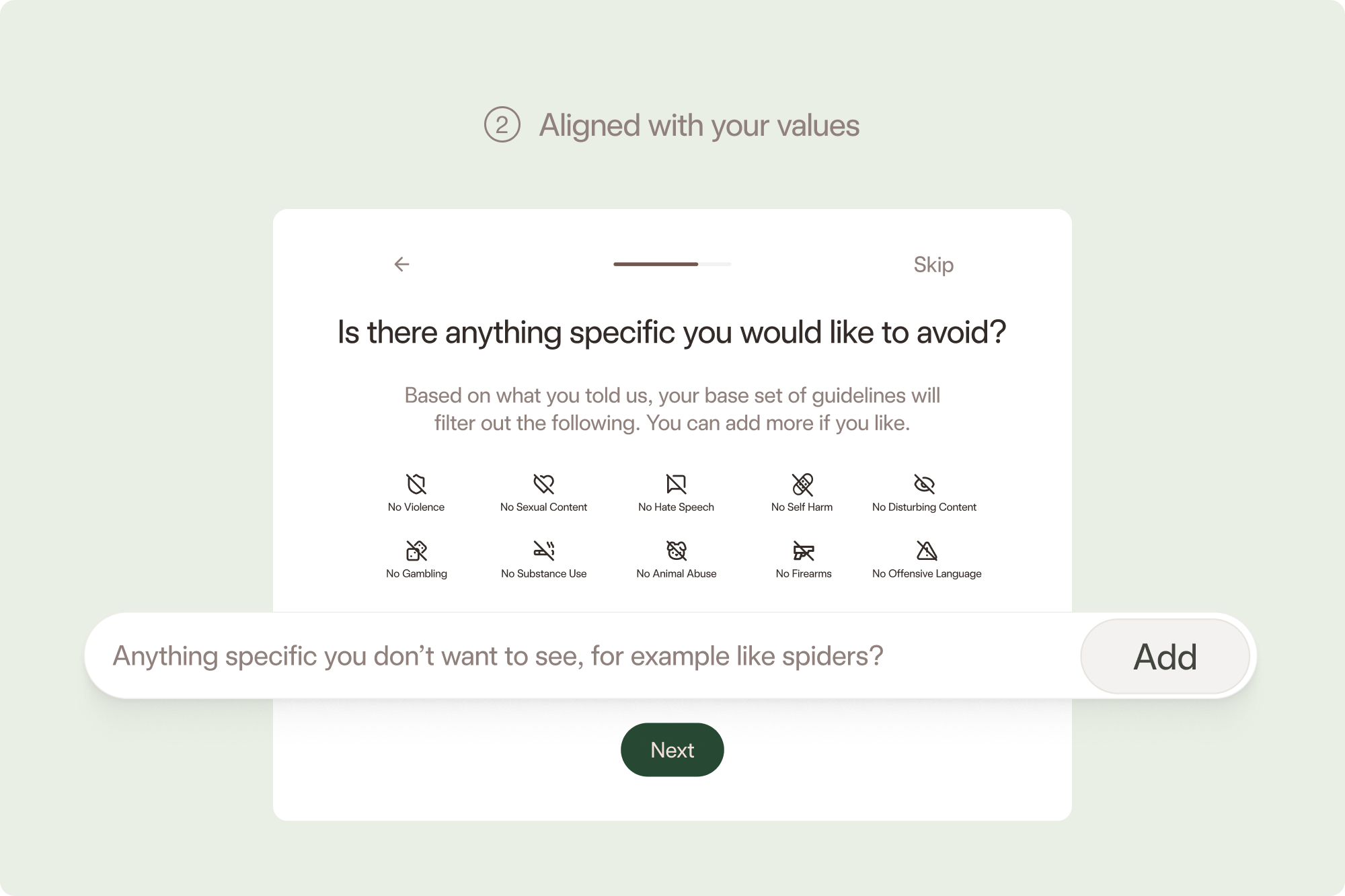

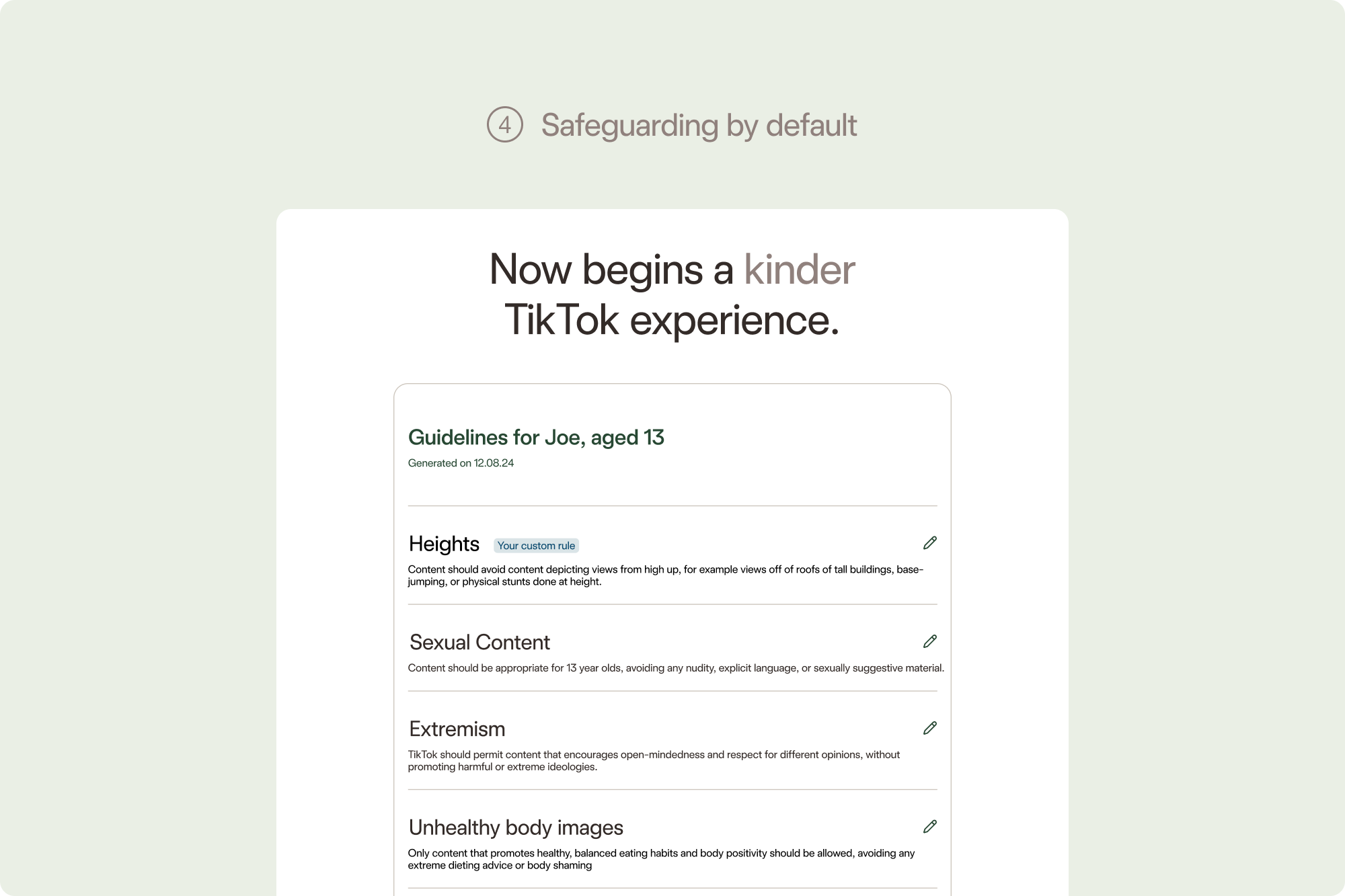

Our training tool uses view time to curate a TikTok feed, optimising the content recommendation algorithm to ensure that the content shared is appropriate and aligns with both the parent's and child's values. Users can create a personalised set of content guidelines based on age and individual preferences. The training tool applies the guidelines to the user’s TikTok feed, performing automatic swiping and viewing, which curates the content shown.
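As a rough sketch of how a set of guidelines could drive the automatic swiping, the Python below maps a video's labels to an action and a view time. It assumes each video already carries content labels, which would have to come from some classification step not shown here. The `Guidelines` type, the `plan_action` function, the action names and the timings are hypothetical, and the way age feeds into the guidelines is left out.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Guidelines:
    """A family's content guidelines (hypothetical structure)."""
    avoid: set[str] = field(default_factory=set)   # e.g. {"violence"}
    prefer: set[str] = field(default_factory=set)  # e.g. {"science"}


def plan_action(labels: set[str], guidelines: Guidelines,
                linger: float = 12.0, neutral: float = 3.0,
                skip: float = 0.5) -> tuple[str, float]:
    """Return an (action, seconds) pair for one video.

    Avoided categories take priority: a video with any avoided label
    is swiped away at once, even if it also has a preferred label.
    """
    if labels & guidelines.avoid:
        return ("swipe", skip)
    if labels & guidelines.prefer:
        return ("watch", linger)
    # Neither avoided nor preferred: give it a short, neutral view.
    return ("view_briefly", neutral)


# Example guidelines using categories named in this article.
family = Guidelines(
    avoid={"violence", "strong language", "extreme views"},
    prefer={"cats", "science"},
)
```

Checking the avoided set first is a deliberate choice in this sketch: it means a safety preference always outranks a taste preference when a video matches both.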

Our tool begins to address key insights we came across in our research:

Pre-existing inequalities can exacerbate young people’s vulnerability online; time-poor and/or less tech-savvy guardians face bigger challenges in discussing and planning how their children behave online. These factors lead to a safety gap between families who are in a position to actively manage the dangers of the internet for their children and those who are not.

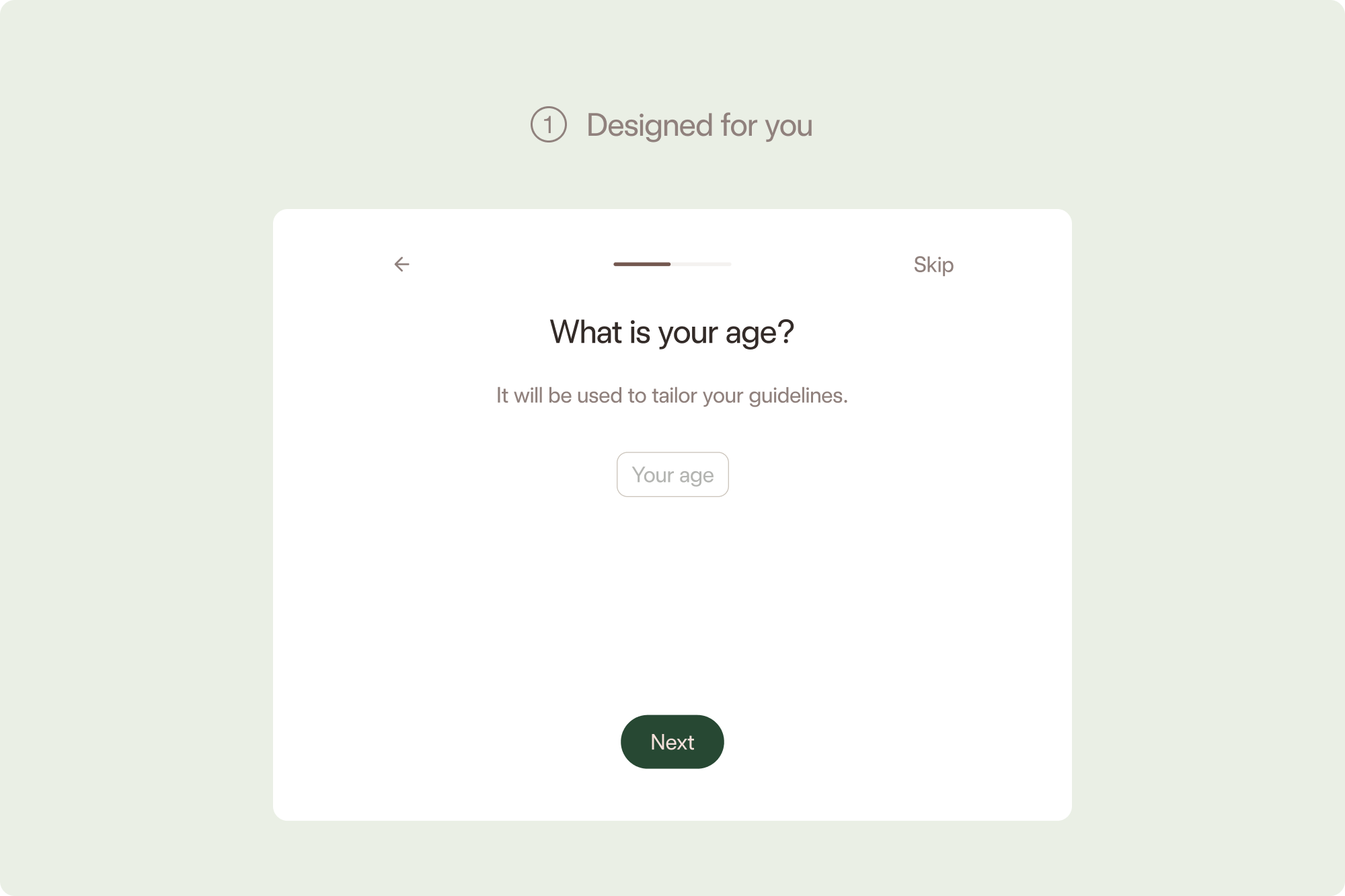

We carefully designed our prototype with these considerations in mind: onboarding is through natural language, which makes it accessible to those with lower tech knowledge. This tool alone won’t level out existing inequalities in social media literacy, but it is a step in the right direction.

The flow we designed to help parents and children set up their own guidelines

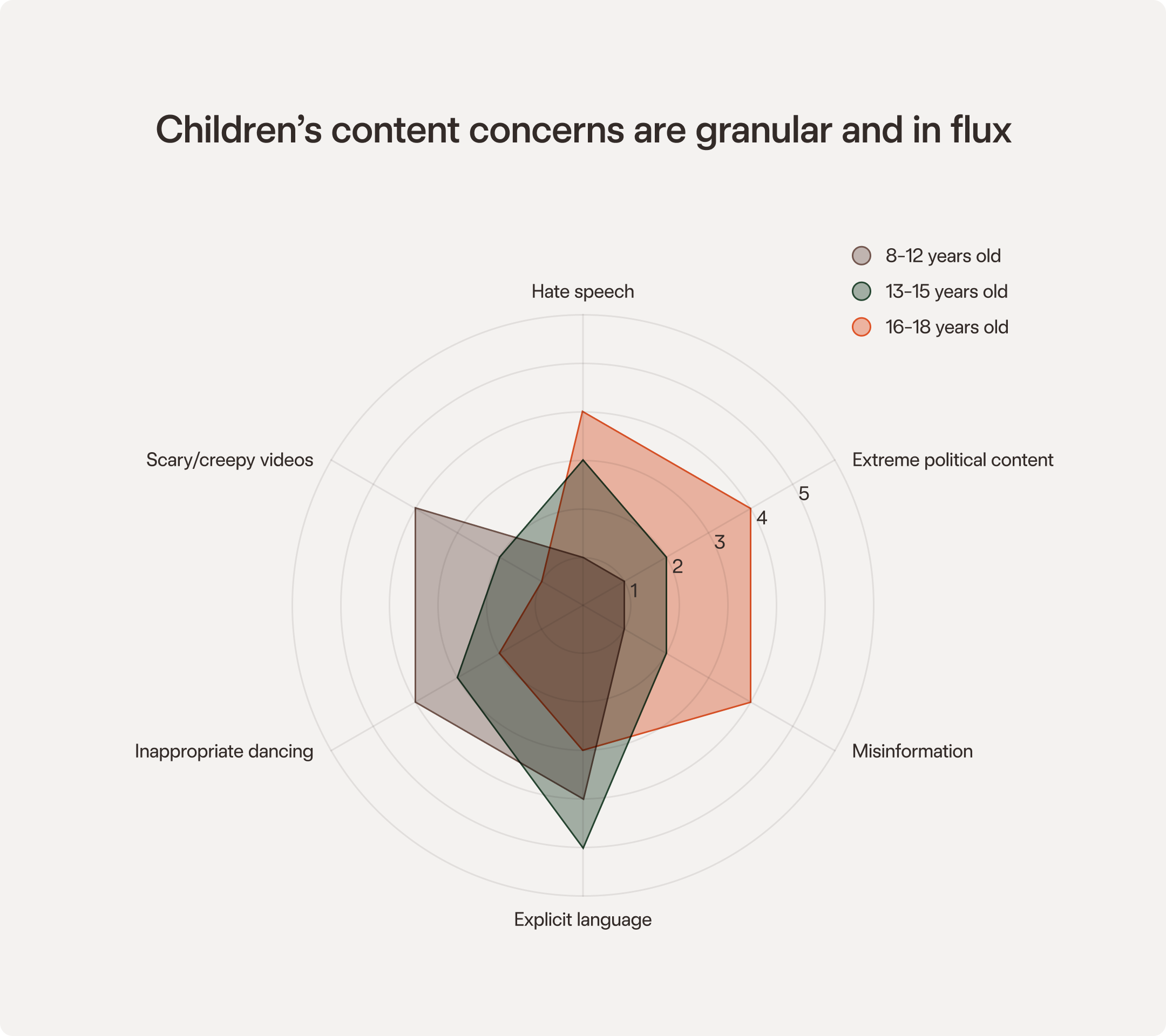

Safety features are present within many digital services that young people use, but they are not widely known about or used. They are often hidden deep in the app’s architecture, where they are unlikely to be found. Young people's content concerns are far more nuanced than social media’s guidelines would suggest. Their preferences are also in constant flux, growing and adapting with them - one size does not fit all. As one parent, who is also a director at the European AI and Society Fund, said: “I can completely sympathise with families who do not have the bandwidth to fight their way through this labyrinth and end up handing over devices with insufficient safeguards on them.”

Our TikTok training tool allows young people and their families to create tailored guidelines that can evolve and shift as they grow and their tastes change.

The current discourse on young people’s internet use is disproportionately focused on the usage side - the onus is on families and young people themselves to come up with ways to keep safe online. We need to shift the responsibility to keep young people safe back on to the creators of digital services.

After a user has chosen their guidelines, our prototype takes care of the rest; it controls the content experience so that users do not have to.

The landscape around children and technology is shifting and developing rapidly. Children in the UK are online from an increasingly young age, and screen time is on the rise. 98% of young people between the ages of 12 and 15 have their own smartphone [1]. The exact relationship between internet use and young people’s mental health is up for debate, but it is safe to say that the level of power that internet-enabled media exerts on its users is different from more traditional media forms. Social media apps like YouTube and TikTok, in particular, are designed by experts to be as addictive and attention-sapping as possible.

We will continue to investigate this area and apply our blend of tech and design skills to test out appropriate responses. We will also continue to develop the prototype, looking at how it can be adapted to apply an organisation’s safeguarding rules to their content, among other things. If you or your organisation is interested in leveraging technology to make safer and more enjoyable experiences for young people, please get in touch at hello@normally.com.

References

[1] Five things to consider when buying your child their first mobile phone, BBC Bitesize. https://www.bbc.co.uk/bitesize/articles/z8rk239